Synopsys and NVIDIA Double Down on Acceleration

$2b Investment from NVIDIA and Joint Development Agreement

Engineering has become a compute problem. Everything from chips to cars to industrial systems now depends on simulation, verification and modelling tools that push straight into the limits of traditional computer hardware performance. The simulation tools, multiphysics engines, and electronic design automation sit behind nearly every modern product. But everyone wants to do more, faster. If you can do that, there are better opportunities for customers, engagement, and productivity.

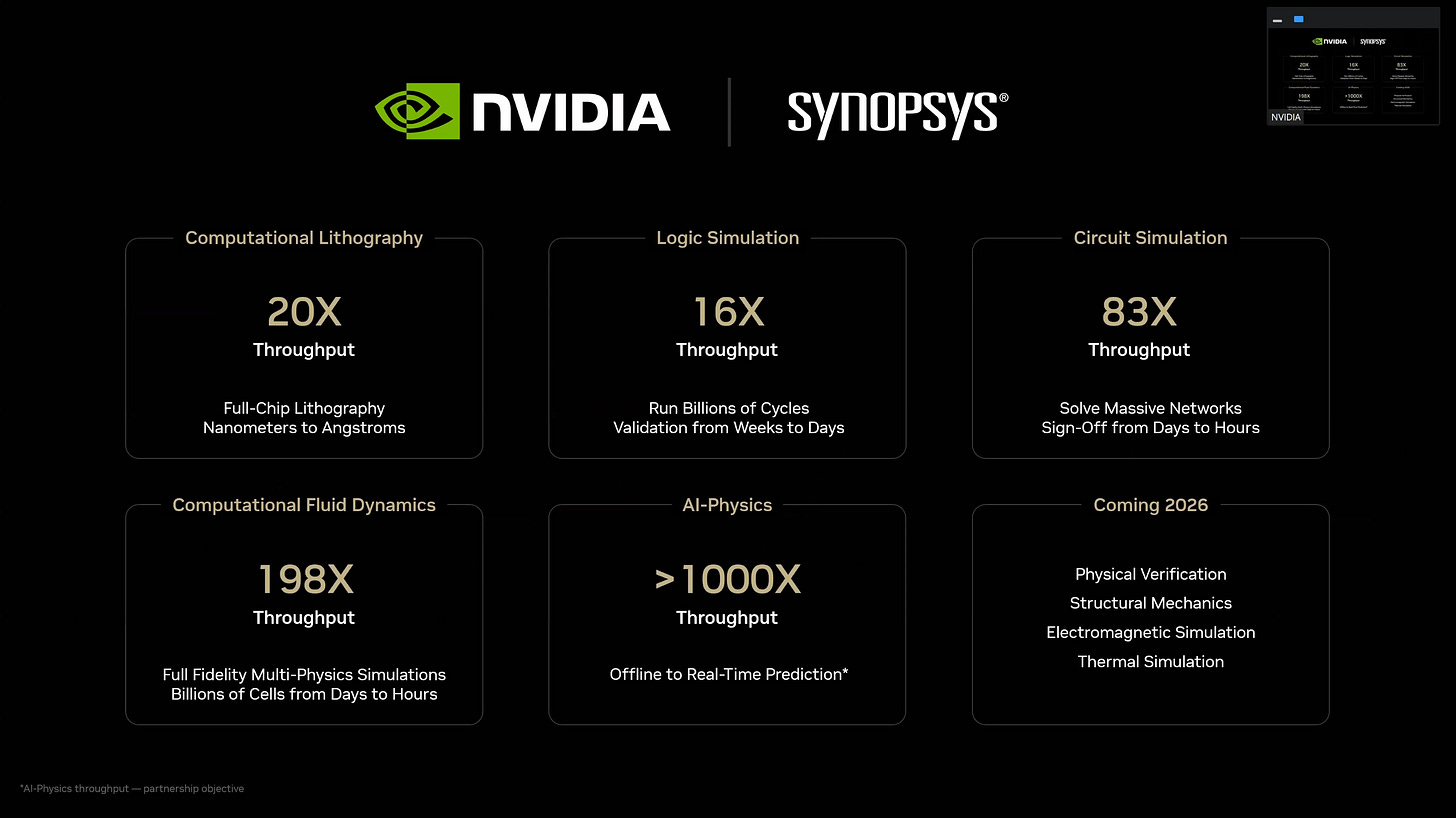

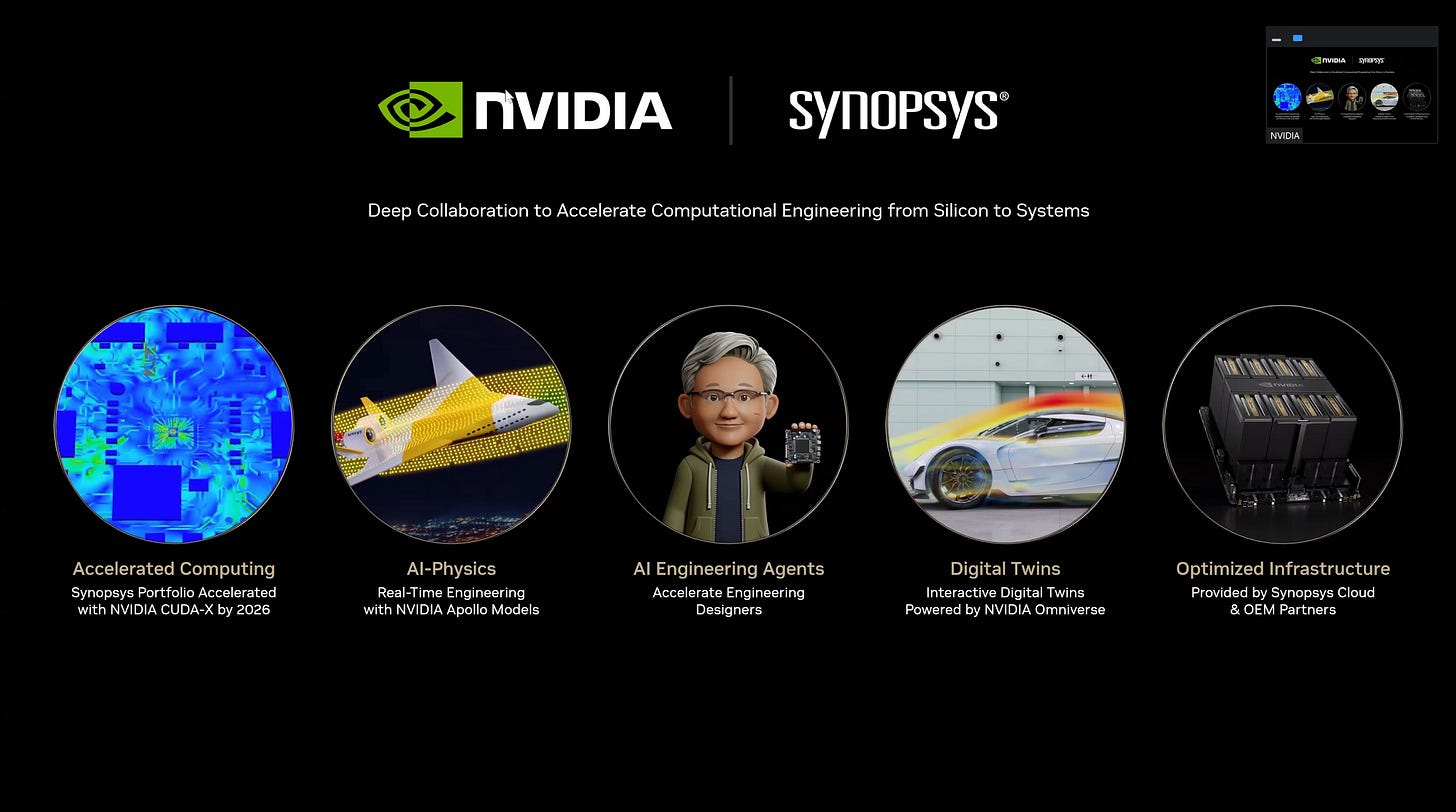

That context brings us to today’s announcement. NVDA 0.00%↑ and SNPS 0.00%↑ revealed that they are entering a broad multi-year partnership to accelerate a large portion of Synopsys’ EDA, simulation & multiphysics portfolio using NVIDIA portfolio. This means mostly GPUs and using AI models and digital twin platforms to accelerate Synopsys’ portfolio above and beyond the current implementation, especially in areas that have been CPU dominated since the advent of chip design.

To reinforce that alignment, NVIDIA is also making a two billion dollar investment into Synopsys’ common stock at $414.79 per share as part of the agreement.

On the surface this looks like a standard collaboration between a compute vendor (NVIDIA) and a software vendor (Synopsys), but the implied scope from the details is much larger. The engagement is to link together compute acceleration, AI assisted engineering, digital twins and solver reformulation in joint strategy to accelerate Synopsys’ go-to-market strategy in a way that spans everything from transistor level design to complete physical products across all of engineering. I mean all of it.

For readers outside the EDA world, this is unusual. Partnerships in this sector are normally narrow; related to one tool, one workflow, one integration. Here the companies are attempting something a bit more than that, which is perhaps a product of the current market. Synopsys has said it will lead the charge, accelerate its chip design, physical verification, optical simulation, molecular modelling, mechanical analysis & electromagnetic tools, using NVIDIA tools. NVIDIA will collaborate to provide optimized CUDA, AI frameworks, NeMo agents, NIM microservices & Omniverse support to Synopsys’ portfolio. The combined strategy, claim the companies, will allow customers to run larger or quicker simulations, tighten the time between iteration loops, and begin replacing some physical prototyping with virtual environments where it makes sense.

As part of the announcement, there was a CEO Q&A session for press and analysts from Jensen Huang and Sassine Ghazi. The press release was a little light on explicit detail, so the following topics were top of mind for the attendees:

Moving safety critical workloads onto GPUs that were historically done on CPUs.

The role of AI surrogate models in the engineering loop

The nature of the financial stake through share issuance, and exclusivity

How to reformulate solvers that were originally designed for CPUs

What this means for customers who rely on heterogeneous compute

I also had a separate Q&A session with Ravi Subramanian (Synopsys) and Tim Costa (NVIDIA) to ask some additional follow ups.

Jensen set the tone in his prepared remarks, noting that “CUDA GPU accelerated computing is revolutionizing design, enabling simulation at unprecedented speed and scale, from atoms to transistors, from chips to complete systems, creating fully functional digital twins inside the computer.”

Sassine framed Synopsys’ role as the software layer that can bring these capabilities into actual engineering workflows, telling the call that “no two companies are better positioned to deliver AI powered holistic system design solutions than Synopsys and NVIDIA.”

Unfortunately due to NVIDIA’s limitations on transcriptions requiring in-advance written permission, I can’t provide the Q&A word-for-word here. But I can cover the key topics and questions (and answers).

Questions from the announcement

(and some answers)

The scale of technical work ahead

A clear question raised in both Q&A sessions was how much of Synopsys’ portfolio is already GPU native, and how much still requires deep rewriting. The CEOs acknowledged that several workloads already use GPUs, but that the largest simulation time reductions come only after algorithms are reformulated. Jensen noted that “twenty plus” applications have some level of acceleration today, but that the multi physics and electromagnetic workflows require more structural change before they deliver the expected uplift. The companies did not provide dates, but the cadence implied a multi year migration through 2026 and 2027.

This sits on top of work the two companies have already started. Earlier this year at DAC, Synopsys and NVIDIA announced GPU acceleration for computational lithography, including optical proximity correction, resist modelling, and mask synthesis. At the time TSMC was listed as a primary partner, improving simulation times by an order of magnitude. Feedback was that meaningful gains require deep changes to legacy solvers, not just a CUDA port. The same applies to physical verification and parasitic extraction, where Synopsys has already shown early GPU paths for specific modules but still relies on CPU based flows for full scale signoff.

“We have been collaborating in GPU accelerating certain Synopsys applications. We have the leading number of EDA applications, over 20 GPU accelerated applications. The opportunity and what this collaboration is about is really turbocharging the way we do what we do.” - Sassine Ghazi

The practical reading here is that the most advanced flows will continue to run on CPU with a migration to GPU-accelerated configurations for some time. The angle of AI is also a point here, as most of the previous announcements were compute only. Part of the collaboration will also help Synopsys accelerate its AI stack and how that’s implemented into solvers, simulators, and other elements like digital twins. It won’t be an overnight task, but expect updates over the coming years.

The fidelity gap and double precision constraints

A recurring theme was the tension between AI friendly lower precision compute and the higher accuracy and fidelity demands of certain engineering domains that need 64-bit floating point compute. NVIDIA’s latest hardware, Blackwell, has been criticized for not amplifying 64-bit compute in line with previous generations in favor of AI quantized formats, and so the performance/power/cost tradeoff generation on generation hasn’t followed historical trends.

There are a number of engineering fields that rely on typical HPC works like fluid dynamics, finite element simulation, chemical simulation - for example aerospace, automotive, R&D, and industrial safety. These often depend on double precision solvers for accuracy and tightly validated physics models. Both CEOs acknowledged that many flows cannot simply be downshifted in precision without revalidation.

Their answer was two part. Some algorithms can be mathematically reformulated to run in mixed precision without loss of accuracy. There are some algorithms that will remain FP64 entirely, but the expectation is that those will, over time, use surrogate AI models are mature enough to cover parts of the pipeline.

This is an area where neither company attempted overreach. They did not claim that all workloads can be moved, or that AI models can replace physics solvers outright. But the timeline for adoption of lower precision remains an open question. One area in the smaller Q&A session I had was the context of safety critical compute, and ultimately some workloads will remain hybrid in nature.

The funny thing is in all this, I recently came back from the Supercomputing 2025 trade show a couple of weeks ago. During that event, we had a TOP500 press briefing, where the organizers of the TOP500 supercomputer lists give updates on the new rankings. The benchmark at play there is LINPACK, which is an FP64 workload. Professor Jack Dongarra, the floating saint of the TOP500, was asked if he worried about these lower precision formats becoming a mainstay in HPC workloads. He responded with something deceptively simple.

“If I get the right answer, I don’t care.” Prof. Jack Dongarra, TOP500 Co-Founder

Having an extensive holistic discussion about floating point formats in HPC is an article for another time.

The TAM opportunity

A natural follow on question was why the companies were positioning this partnership as something that unlocks new TAM opportunities. That wording implies a larger commercial ambition, and suggests the companies believe the available market for simulation and modelling could expand if the cost and speed barriers are reduced. This matters because, even though we usually think about Synopsys just as an EDA company, it did recently acquire Ansys, a multiphysics simulation tool provider. Multiphysics simulation is not a semiconductor problem; it exists across every engineered system, from mechanical structures to fluids, materials, electromagnetics and robotics. Most industries rely on physics solvers, and funnily enough they look structurally similar to the ones used in chip development.

It was explained to me that the bottleneck is that most of those industries still depend heavily on physical prototypes and hardware testing. When simulation is slow or expensive, companies limit how much they do, which constrains how much design space they can explore. Also at a certain level, physical prototyping is tangible, and comfortable. The idea is that if accelerated compute makes large scale simulation cheaper and easier to deploy, those industries could move far more of their engineering effort into the virtual domain.

As a chemist, the idea is akin to simulating a thousand chemical targets, then only taking the dozen most appealing to actual physical testing. A phrase I’ve used in the past is ‘fail fast, fail often’, however both companies also framed it as an amplification tool as a well as time-to-market enhancement. That shift increases the total amount of computation consumed, not just the performance of individual tools, and so the TCO has to also be there.

Ravi from Synopsys described this difference directly in our follow up session. “In semiconductors it’s about 14 to 15 percent of revenue. Most other industries are 2 to 4 percent because they depend so much on physical prototypes and testing. If you make simulation cheaper and faster, you can shift a lot of that work into the virtual domain. That is a huge opportunity.”

Neither company put an exact number on this shift, and both avoided any forward TAM sizing. But the implication is that if even a modest portion of aerospace, automotive, energy or industrial engineering begins adopting virtual first workflows, the aggregate demand for accelerated simulation could grow significantly. As the lead, Synopsys gains revenue, and as an investor and hardware supplier, NVIDIA grows too.

How this will reach customers

One question that came up repeatedly was how these accelerated workflows will actually make their way into customer environments. The press release mentioned joint cloud-ready offerings, and alignment across enterprise accounts, but did not go into detail on how Synopsys intends to package or deliver the accelerated versions of its tools. This matters because GPU accelerated simulation behaves more like a compute workload than a traditional per seat EDA license, and different industries have very different software procurement models.

Synopsys emphasised that it will lead the commercial motion, leveraging its existing relationships across semiconductors, industrial engineering, automotive, aerospace and energy. That reach is notably wider than NVIDIA’s historical distribution, particularly in areas where engineering teams adopt tools through long standing enterprise agreements rather than through cloud marketplaces. That might change in the future, but is still where we operate today. The collaboration effectively pairs Synopsys’ account depth with NVIDIA’s hardware, CUDA libraries, AI stacks and digital twin platforms, but the companies stopped short of describing how the combined flows will be priced or consumed, if on-prem, through hyperscaler cloud support, or through Synopsys’ own cloud. Probably a little of all of them.

On top of that, because we’re still a bit far down the road on some of this, there were no specifics on whether customers should expect perpetual licensing, usage based consumption, hybrid bundles or cloud metered models. For teams that already run large on premises clusters, this lack of detail leaves important questions about budgeting and deployment. NVIDIA’s messaging, perhaps as expected, suggested that Blackwell class systems will be a natural fit for these workloads, while Synopsys indicated that cloud deployment will be a key path for customers without access to high density accelerated compute. In the follow up session, Tim from NVIDIA framed the cloud angle as part of a longer term shift toward scalable, on demand simulation, but did not commit to any particular consumption model.

The neutrality question

Another thread was whether NVIDIA’s two billion dollar equity investment places Synopsys on a trajectory that implicitly favours NVIDIA hardware over competing accelerators. Both companies were explicit that the partnership is not exclusive. Synopsys stated that its tools will continue to support CPUs and other environments. Jensen also reinforced that customers will be free to choose the hardware that fits their workloads.

But the open question is not contractual neutrality but practical neutrality. Reformulating a solver for CUDA is not a surface level optimisation - It requires deep engineering, validation and ongoing refinement as hardware generations evolve. Once Synopsys invests that effort for NVIDIA’s platform, it is reasonable for customers to ask how equivalent the performance and feature maturity will be on other hardware, especially given the nature of CUDA and cross compatibility. We didn’t expect the CEOs to offer specifics on how parity across architectures would be maintained, as for sure it’s in NVIDIA’s best interests to try and show the best performance on its own.

In the follow up session, Synopsys reiterated that its software remains architecture portable, emphasizing that it has historically ported tools to x86, ARM and custom hyperscaler hardware whenever customers required it. At the same time, NVIDIA stressed that acceleration is not a matter of vendor preference but of computational structure, noting that many solvers only deliver their full uplifts once algorithms are reformulated to exploit GPU parallelism and memory bandwidth. Taken together, the implication is that heterogeneous environments will continue for some time, and that teams running large mixed clusters will have to watch how solver performance and feature maturity evolve as more of the stack is rewritten for accelerated paths.

Where AI fits into the engineering loop

One of the more speculative but important areas of discussion was how AI will be incorporated into engineering workflows. The press release referenced ‘agentic AI engineering’ and highlighted NVIDIA’s NeMo agents, NIM microservices and broader model ecosystem as part of the collaboration. That naturally led to questions about how far these AI systems will sit inside the simulation loop, and what parts of the pipeline they are expected to influence.

Both CEOs were cautious in describing where AI fits into engineering.

Jensen framed it as an assistive layer rather than a replacement for physics, emphasising that many solvers demand accuracy levels only validated numerical methods can provide. He added that AI could emulate narrowly defined pieces of a workflow, noting that ‘AI could be used to emulate the physics, so some amount of the simulation could be accelerated through surrogate models’, but only in places where engineers can validate the behaviour.

Sassine’s comments in the main call and in my follow up reinforced this framing, focusing on AI’s usefulness in exploring design spaces, generating boundary conditions, analysing logs and automating repetitive setup tasks. Synopsys also highlighted the need to preserve correctness across intermediate results, especially in flows that feed into foundry or safety requirements. Taken together, the message was that AI can shorten loops and reduce manual effort, but physics based solvers remain the backbone of any production workflow.

Analyst Thoughts

There is a long history of technological optimism in engineering simulation. Every decade comes with the promise of virtual prototyping at scale, and every decade the bottleneck has been compute, fidelity, or both. What got me personally into Chemistry was the fact that at the time, the ability to simulate chemical interactions was nascent and I remember in my university interviews highlighting it as a key differentiator for the future of chemical development.

What is different now is that the hardware we can use, and how it is used. The prevalence of AI models is such that the hardware stack is changing, but also the design software stack is being built to align with it at the same time. This means that any two companies with substantial influence can commit to long term engineering resources to improve that alignment.

From NVIDIA’s perspective, this is a bet on a new class of compute demand beyond AI inference and AI training. The company already serves hyperscalers and AI startups. The next growth frontier lies in industries that produce physical products and run complex simulations. NVIDIA already highlights physical AI, robotics and such, but something in-between agentic and robotic compute is the physical design industry. Synopsys (and Ansys), with its decades of experience, becomes the route into that market.

From Synopsys’ perspective, this is a chance to lead the next shift in tool capability. EDA has been evolving through algorithmic improvements, parallelism and incremental efficiency gains, but a move into accelerated compute and AI driven engineering allows the company to open new product categories and reframe existing ones. Amplifying multiphysics like FEM, which has been a mainstay on CPUs for decades, is a golden opportunity.

The partnership does not resolve every technical question. It does not answer how far AI surrogate models can truly go. It does not describe how every solver will be ported. It does not address how pricing will change as simulation becomes a compute heavy workload. It does not settle the competitive landscape for heterogeneous compute. The companies were asked how this agreement goes above and beyond a simple collaboration, and the key answer there is go-to-market with engineering design tools.

What we’re going to end up seeing is a commitment to push simulation, verification and digital twins into a form that is only possible with large scale accelerated compute and AI, predominantly built on NVIDIA’s accelerated hardware and software. The two billion dollar investment feels like simply a financial detail to help NVIDIA support its ecosystem, but also try and uplift it while the commercial opportunity is good. What will be interesting to see is if other companies make similar statements to go after similar markets, or if this lands different compared to the status quo with the planned business model.

We’re expecting to hear more about the fruits of this engagement in 2026. Keep an eye out for Synopsys Executive Forum (March 11-12, Santa Clara), GTC (March 16-19, San Jose), and DAC (July 26-29, Long Beach).

More Than Moore, as with other research and analyst firms, provides or has provided paid research, analysis, advising, or consulting to many high-tech companies in the industry, which may include advertising on the More Than Moore newsletter or TechTechPotato YouTube channel and related social media. The companies that fall under this banner include AMD, Applied Materials, Arm, Armari, ASM, Ayar Labs, Baidu, Bolt Graphics, Dialectica, Facebook, GLG, Guidepoint, IBM, Impala, Infineon, Intel, Kuehne+Nagel, Lattice Semi, Linode, MediaTek, NeuReality, NextSilicon, NordPass, NVIDIA, ProteanTecs, Qualcomm, Recogni, SiFive, SIG, SiTime, Supermicro, Synopsys, Tenstorrent, Third Bridge, TSMC, Untether AI, Ventana Micro.

Original Press Release: https://news.synopsys.com/2025-12-01-NVIDIA-and-Synopsys-Announce-Strategic-Partnership-to-Revolutionize-Engineering-and-Design

Love this!