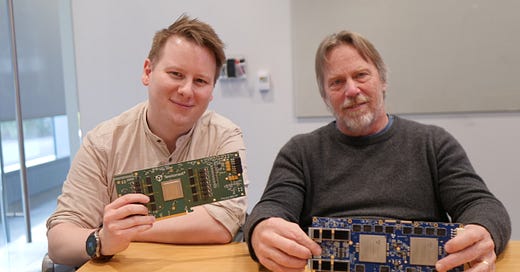

I covered the Tenstorrent CEO/CTO switch a couple of posts back, and on my recent trip to SF I managed to get some time with Jim at HQ. He was flying between what seemed like a number of technical and customer meetings. The last time I met Jim in person was almost six years ago at an Intel architecture event, and that was a group interview, so we didn’t have…

© 2025 Dr. Ian Cutress
