AMD and OpenAI: The 6 Gigawatt Bet

Redefining AI Competitive Economics

AMD was up 35% in early trading on this news, which was released at 8am ET on October 6th, 2025.

AMD and OpenAI today announced a plan for OpenAI to purchase and deploy (through affiliates) up to six gigawatts of AMD Instinct GPUs over multiple hardware generations, beginning with the MI450 series in the second half of 2026, with a potential sales value of $90 billion USD. In exchange, AMD has issued OpenAI a warrant to purchase up to 160 million AMD shares at $0.01 per share - shares that vest progressively as OpenAI’s deployment scales from one to six gigawatts and as AMD’s share price crosses a series of performance thresholds up to $600 per share.

For those doing the mathematics: if OpenAI were to sell all 160 million shares at $600 per share, they would be worth $96 billion, roughly the same value as the hardware in the deal. (This sentence has been edited to clarify it as a share sales statement, not as a purchase statement, given some confusion.)
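The same arithmetic as a minimal Python sketch. It assumes all 160 million warrant shares have vested and are sold at a single price, and it ignores taxes, fees, and the market impact of selling a block that large. The $165 and $225 prices are the pre-announcement baseline and opening peak, used here only for illustration.

```python
# Gross value of the AMD warrant to OpenAI at a given share price.
# Share count and strike come from the announcement; sale prices are illustrative.

WARRANT_SHARES = 160_000_000  # maximum shares under the warrant
STRIKE_PRICE = 0.01           # exercise price per share, USD


def warrant_value(share_price: float, vested_shares: int = WARRANT_SHARES) -> float:
    """USD value of exercising `vested_shares` at the strike and selling at `share_price`."""
    return vested_shares * (share_price - STRIKE_PRICE)


if __name__ == "__main__":
    for price in (165, 225, 600):
        print(f"${price}/share -> ${warrant_value(price) / 1e9:,.1f}B")
```

The strike is so small ($1.6 million across all shares) that the warrant's value is effectively the share price times the vested share count.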

AMD’s announcement of a strategic partnership with OpenAI represents not just another GPU supply agreement, but a structural reconfiguration of how AI infrastructure is financed and aligned. On the surface, this is a supply deal. Underneath, it could be considered a financial instrument that transforms AI hardware sales into equity alignment, tying AMD’s long-term valuation directly to OpenAI’s infrastructure growth. AMD describes the partnership as “expected to deliver tens of billions of dollars in revenue,” and its stock surged more than thirty percent following the announcement. The market clearly sees this as pulling forward AMD’s ambition to reach ten billion dollars in annual AI revenue.

This deal also arrives amid a broader shift in AI infrastructure economics. Intel has recently secured capital commitments from NVIDIA, SoftBank, and the U.S. government to co-finance its foundry expansion, a move that blurs the line between supplier, competitor, and investor. The NVIDIA-based Stargate initiative with OpenAI, Oracle, and others now represents more than five hundred billion dollars in collective investment, and has transformed GPU procurement into long-term capital alignment. The common thread is that compute capacity is no longer a simple capital expense; it is becoming a financial asset class. In that context, AMD’s OpenAI partnership fits a growing trend: AI compute is no longer being bought and sold in discrete transactions - it’s being financed, securitized, and capitalized at ecosystem scale.

The Announcement

The press release states that OpenAI will first purchase and deploy one gigawatt of AMD Instinct MI450 GPUs, with deployment beginning to ramp in the second half of 2026. Reaching that milestone vests the first tranche of equity, and subsequent tranches vest as deployment scales toward a six-gigawatt ceiling across future AMD GPU generations. Both companies frame the deal as a “multi-year, multi-generation” partnership encompassing hardware, software, and rack-scale system co-engineering.

AMD CEO Dr. Lisa Su positioned the partnership as a “win-win” that advances the broader AI ecosystem, while CFO Jean Hu characterized it as both strategically accretive and earnings-positive over time. For OpenAI, CEO Sam Altman emphasized the need for “massive compute capacity” to realize AI’s potential, signaling that this partnership is not just about supply security but about scaling beyond NVIDIA’s ecosystem constraints.

Inside the Deal

AMD’s 8-K filing reveals how the equity component transforms this agreement into one of the most unusual customer-supplier alignments in semiconductor history. The nominal strike price makes the warrant functionally equivalent to performance-based equity without requiring AMD to surrender governance control or board representation. It acts as a symbiotic partnership, rewarding OpenAI only if it drives measurable, revenue-generating demand that benefits both companies.

Vesting follows a dual-track structure. The first tranche, tied to the initial one-gigawatt MI450 deployment, becomes guaranteed once hardware is delivered and accepted. Additional tranches unlock as cumulative GPU purchases scale toward six gigawatts and as AMD’s share price crosses sequential thresholds that rise from the $165 pre-announcement baseline (the stock peaked at $225 as the market opened) to as high as $600. The design ties reward to both operational delivery and market recognition, requiring OpenAI to help AMD grow its datacenter business to a level that compels investor repricing.
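Because the actual vesting schedule (Exhibit E) is redacted, the dual-track logic can only be sketched. The Python below is a hypothetical model: the one-gigawatt first tranche, the six-gigawatt ceiling, the 160 million share total, and the $600 top threshold come from the announcement, but the intermediate gigawatt steps, price thresholds, and per-tranche share counts are invented for illustration. It also assumes a price threshold counts as met once the stock has ever reached it, which may not match the real measurement terms.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Tranche:
    gigawatts: float                  # cumulative deployment milestone (GW)
    price_threshold: Optional[float]  # AMD share price to cross; None = deployment only
    shares: int                       # shares that vest when both conditions hold


# HYPOTHETICAL schedule: the real tranches are redacted in the 8-K.
HYPOTHETICAL_SCHEDULE = (
    Tranche(1.0, None, 30_000_000),
    Tranche(2.0, 250.0, 26_000_000),
    Tranche(3.0, 320.0, 26_000_000),
    Tranche(4.0, 400.0, 26_000_000),
    Tranche(5.0, 500.0, 26_000_000),
    Tranche(6.0, 600.0, 26_000_000),
)


def vested_shares(
    deployed_gw: float,
    peak_price: float,
    schedule: Sequence[Tranche] = HYPOTHETICAL_SCHEDULE,
) -> int:
    """Sum shares from every tranche whose deployment AND price conditions are both met."""
    total = 0
    for t in schedule:
        gw_ok = deployed_gw >= t.gigawatts
        price_ok = t.price_threshold is None or peak_price >= t.price_threshold
        if gw_ok and price_ok:
            total += t.shares
    return total


if __name__ == "__main__":
    for gw, price in ((1.0, 165.0), (3.0, 330.0), (6.0, 599.0), (6.0, 600.0)):
        print(f"{gw:.0f} GW, peak ${price:.0f} -> {vested_shares(gw, price):,} shares")
```

Under this assumed schedule, three gigawatts deployed with AMD having peaked at $330 vests 82 million shares, while reaching the full six gigawatts without the stock ever crossing $600 leaves the final tranche unvested. The point is the AND condition: neither deployment nor share price alone unlocks the later tranches.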

The warrant’s duration runs to October 2030. Any unvested shares are automatically cancelled if AMD terminates the GPU supply agreement for cause. All vested but unexercised shares automatically convert at expiry, giving OpenAI a fixed window for liquidity while preventing long-term overhang.

OpenAI’s registration rights extend through 2033, allowing it to sell vested shares in minimum blocks of one hundred million dollars. This avoids small-scale sales that could create volatility and ensures any disposition attracts institutional investors. AMD also retains anti-dilution and transfer restrictions, preventing OpenAI from reselling or transferring the warrant except to affiliates.
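The minimum-block rule translates into a share-count floor that falls as AMD’s price rises. A small sketch, assuming the $100 million minimum is measured at the sale price; the exact definition in the registration rights terms may differ.

```python
import math

MIN_BLOCK_USD = 100_000_000  # minimum registered sale size cited for OpenAI's registration rights


def min_shares_per_block(share_price: float) -> int:
    """Smallest whole number of shares whose sale value meets the minimum block size."""
    return math.ceil(MIN_BLOCK_USD / share_price)


def is_eligible_block(shares: int, share_price: float) -> bool:
    """True if selling `shares` at `share_price` meets the minimum block size."""
    return shares * share_price >= MIN_BLOCK_USD


if __name__ == "__main__":
    for price in (165.0, 250.0, 600.0):
        print(f"${price:.0f}/share -> at least {min_shares_per_block(price):,} shares per sale")
```

At a $165 share price a single registered sale would need at least 606,061 shares; at $600 that floor drops to 166,667 shares.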

Financially, the structure achieves several objectives. It shifts AMD’s customer-acquisition cost into equity rather than up-front discounting, limits dilution to realized performance, and guarantees at least one gigawatt of Instinct shipments under defined terms. It also creates a self-funding pathway for OpenAI. As AMD’s share price rises with each milestone, the warrant’s paper value could help finance later GPU purchases, reducing the need for direct capital outlay.

The approach contrasts with NVIDIA’s capital partnerships and Intel’s foundry financing model. Oracle’s Stargate ecosystem and NVIDIA/OpenAI collaboration rely on direct co-investment, locking hyperscalers into vertically integrated infrastructure through large-scale capital deployment. Intel’s recent fundraising from NVIDIA, SoftBank, and the U.S. government involves joint participation in manufacturing capacity, effectively merging supplier and investor roles. AMD’s structure differs in that it keeps the core transaction commercial, with OpenAI still purchasing hardware outright, but overlays an equity-linked incentive to expand that consumption over time. Instead of financing its buildout through external capital contributions, AMD is converting future sales performance into a share-based reward system, allowing OpenAI to benefit financially from the success of the very supply it consumes.

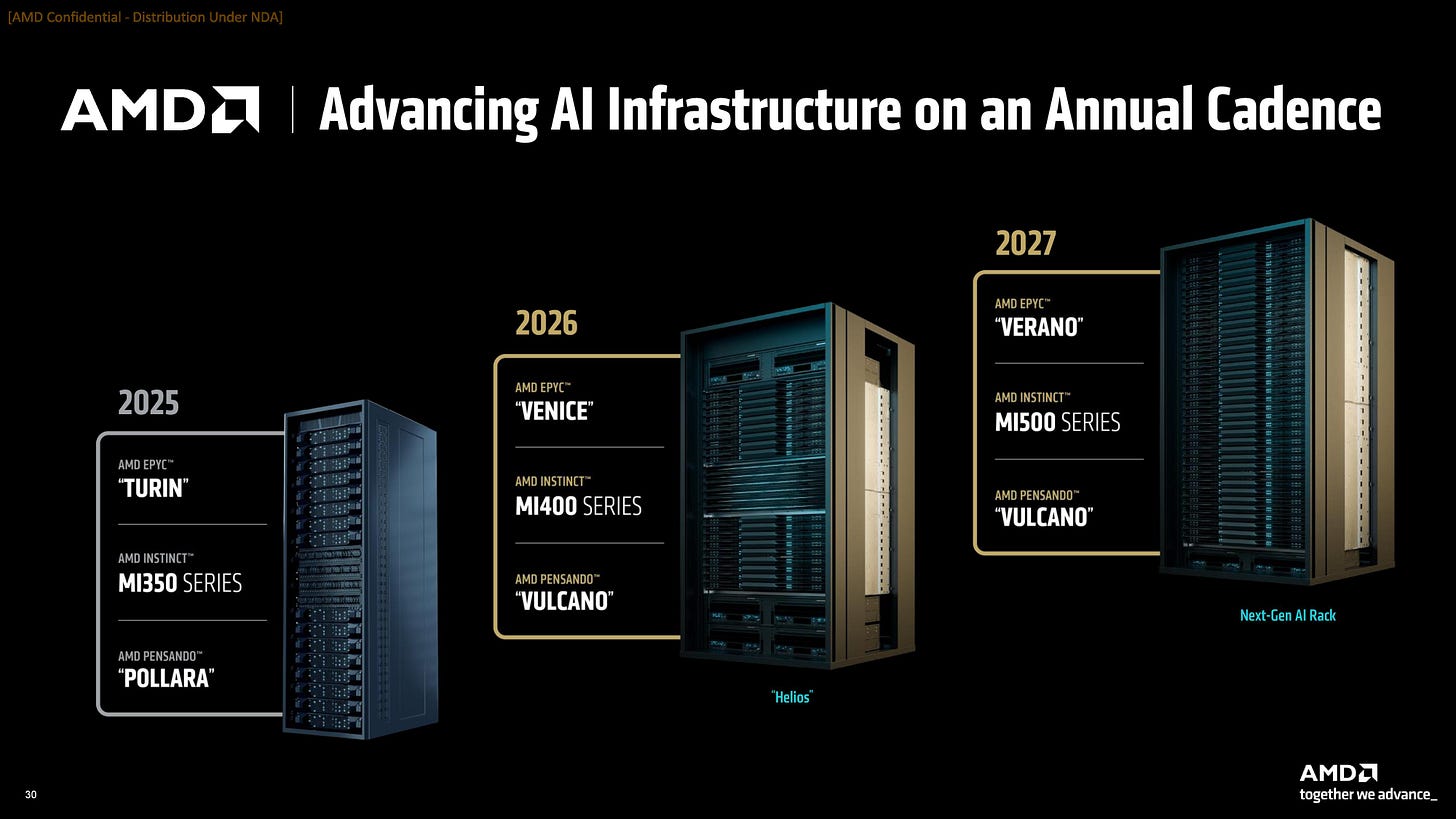

AMD’s Roadmap

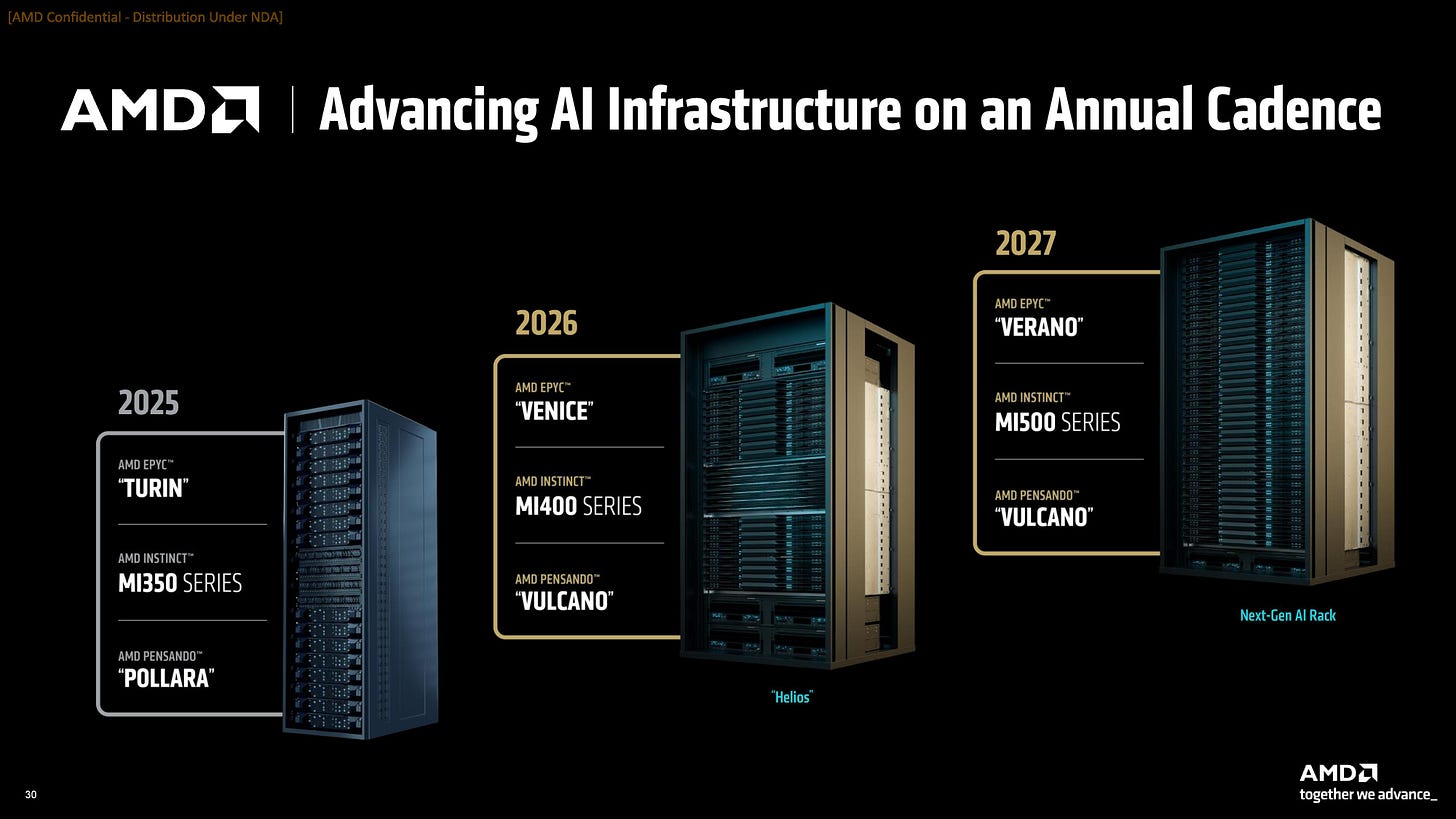

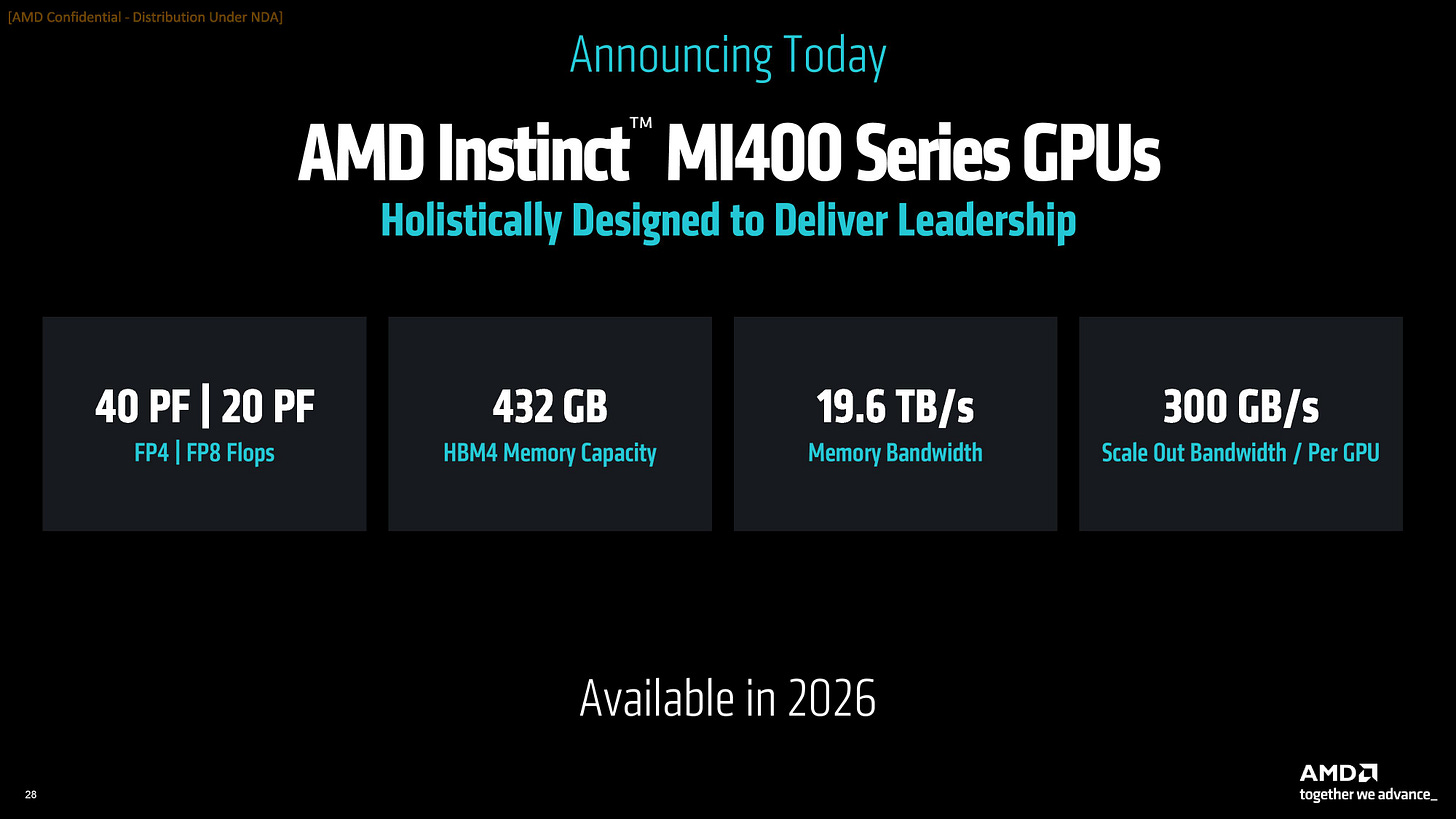

At the heart of this partnership lies AMD’s next major datacenter GPU generation: the Instinct MI450. Built on AMD’s next-generation CDNA architecture, MI450 is expected to be the company’s first product line optimized for true rack-scale deployment, integrating compute, interconnect, and memory bandwidth at system level rather than device level. AMD’s Helios platform, its new reference architecture for large AI clusters, will combine MI450 GPUs with Zen 6-based CPUs, high-bandwidth memory, and advanced interconnect fabric into pre-engineered rack-scale systems designed to compete directly with NVIDIA’s HGX and DGX SuperPODs.

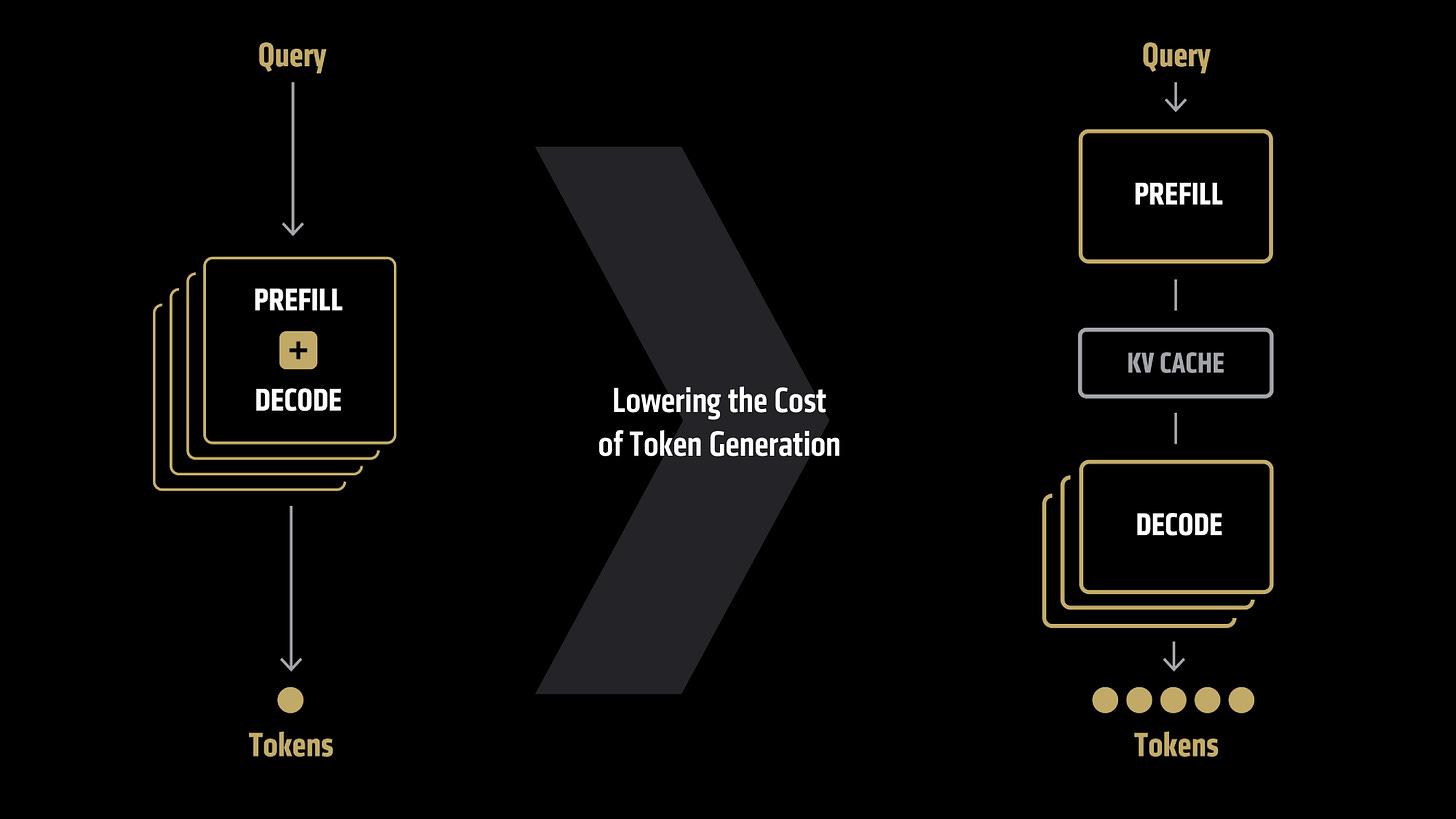

Helios is not a standalone server but a full datacenter block. By selling at rack granularity, AMD can control system topology, software tuning, and cooling integration, allowing ROCm, its open software stack, to scale across thousands of nodes with predictable efficiency. The MI450 ramp in 2H26 positions AMD to meet OpenAI’s first one-gigawatt commitment within the launch window of its next-generation CDNA platform.

Beyond that, AMD’s roadmap transitions to the MI500 family, which is expected to build on the same system design philosophy while introducing higher bandwidth memory and tighter integration of compute and fabric. By the time OpenAI approaches the later tranches of its six-gigawatt target, AMD will likely be shipping MI500-based systems, meaning that OpenAI’s volume commitments serve as an anchor for AMD’s next architectural generation as well.

Economic Scale and Translation

Six gigawatts of GPU compute equates to an enormous scale in semiconductor terms. If AMD’s MI450 delivers roughly the same thermal envelope per accelerator as current high-end GPUs - around one to two kilowatts per chip - a six-gigawatt deployment implies roughly three to six million devices. At an estimated blended average selling price of $24,000 to $30,000 per unit, that spans roughly $72 billion to $180 billion; three million devices at $30,000 each corresponds to the $90 billion in cumulative hardware revenue potential cited over the lifetime of the agreement. Even assuming lower utilization or phased adoption, AMD’s projection of “tens of billions” aligns with a reasonable revenue distribution across multiple product generations.
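The device and revenue range can be reproduced with a short Python sketch. It assumes, as the paragraph does, that the full six gigawatts is accelerator power at one or two kilowatts per device; in reality cooling, networking, and host CPUs consume part of any facility power budget, so the true device count would be lower.

```python
from itertools import product

TOTAL_GW = 6.0
KW_PER_DEVICE = (1.0, 2.0)   # assumed per-accelerator power envelope (kW)
ASP_USD = (24_000, 30_000)   # assumed blended average selling price (USD)


def device_count(total_gw: float, kw_per_device: float) -> float:
    """Accelerator count if the whole power budget goes to accelerators."""
    return total_gw * 1_000_000 / kw_per_device  # 1 GW = 1,000,000 kW


def hardware_revenue(devices: float, asp_usd: float) -> float:
    """Cumulative hardware revenue in USD."""
    return devices * asp_usd


if __name__ == "__main__":
    for kw, asp in product(KW_PER_DEVICE, ASP_USD):
        n = device_count(TOTAL_GW, kw)
        revenue_b = hardware_revenue(n, asp) / 1e9
        print(f"{kw:.0f} kW/device, ${asp:,} ASP -> {n / 1e6:.0f}M devices, ${revenue_b:.0f}B")
```

The four corners of the grid land at $72B, $90B, $144B, and $180B, which shows how sensitive the headline figure is to the per-chip power assumption.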

AMD counterbalances the financial risk of this commitment with equity dilution: up to 160 million new shares for OpenAI. The market appears comfortable with that tradeoff, interpreting it as performance-based rather than speculative dilution.
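The dilution side of that tradeoff can be quantified. If N shares are outstanding and n new shares are issued, existing holders keep a fraction N/(N+n) of the company, so they come out ahead only if total equity value grows by more than n/N. The sketch below uses roughly 1.62 billion AMD shares outstanding as an approximate, assumed figure (check AMD’s latest filing), and it ignores the negligible $0.01-per-share exercise proceeds.

```python
APPROX_SHARES_OUTSTANDING = 1_620_000_000  # ASSUMPTION: approximate AMD share count
WARRANT_SHARES = 160_000_000               # maximum warrant shares from the announcement


def dilution_fraction(new_shares: float, outstanding: float) -> float:
    """Share of the enlarged company owned by the new holder."""
    return new_shares / (outstanding + new_shares)


def breakeven_value_growth(new_shares: float, outstanding: float) -> float:
    """Fractional growth in total equity value needed to keep value per existing share flat."""
    # V_new / (N + n) >= V_old / N  <=>  V_new / V_old >= 1 + n / N
    return new_shares / outstanding


if __name__ == "__main__":
    d = dilution_fraction(WARRANT_SHARES, APPROX_SHARES_OUTSTANDING)
    g = breakeven_value_growth(WARRANT_SHARES, APPROX_SHARES_OUTSTANDING)
    print(f"Full vesting dilutes existing holders by {d:.1%}")
    print(f"Equity value must rise more than {g:.1%} to offset it")
```

Under that assumption, full vesting is roughly 9% dilution, so a share-price move of the size seen on announcement day already exceeds the roughly 10% break-even.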

OpenAI’s Position

For OpenAI, this structure solves several strategic problems at once. It secures guaranteed access to AMD hardware in a supply-constrained market, diversifies beyond NVIDIA, and provides financial leverage for future infrastructure scaling. The warrant effectively grants OpenAI an embedded financial return on its own hardware purchases. As it meets deployment milestones and AMD’s stock rises, OpenAI’s vested shares appreciate, creating liquidity that can be used to fund additional compute expansion.

This arrangement gives OpenAI a partial hedge against the capital intensity of AI infrastructure, but it also introduces dependency. The warrants are only valuable if AMD’s stock performs and if OpenAI actually deploys at the intended scale. Missing milestones or failing to monetize GPU capacity could strand value. It also raises governance questions: OpenAI now has a direct financial incentive to promote AMD’s hardware ecosystem, potentially influencing supplier neutrality across its deployments and partnerships.

Industry Context: Competing Ecosystems

The AMD–OpenAI partnership lands within an environment still dominated by NVIDIA’s gravitational pull. OpenAI’s own Stargate initiative, co-financed by SoftBank, Oracle, and others, represents one of the largest single commitments to NVIDIA hardware ever assembled, with roughly five hundred billion dollars of projected infrastructure investment. That ecosystem has effectively bound hyperscalers, model developers, and datacenter operators to NVIDIA’s vertically integrated stack, where the company controls not only GPU architecture but also interconnect, system topology, and software frameworks.

AMD’s approach diverges in structure and philosophy. By embedding equity incentives instead of demanding co-investment, AMD creates a partnership model that rewards consumption rather than exclusivity. Rather than concentrating ecosystem power within one supplier’s vertically integrated roadmap, AMD is building a distributed network of strategic buyers who participate in its upside. This decentralizes the hyperscaler dynamic, turning AMD’s compute roadmap into a shared platform rather than a single-vendor dependency.

In practice, this structure could attract AI developers and smaller cloud providers that lack the capital depth of Stargate yet still seek long-term alignment with a semiconductor partner. It also gives AMD a way to convert prospective customers into aligned stakeholders without the balance-sheet entanglement that NVIDIA’s large-scale ecosystem agreements require.

Limitations and Caveats

The agreement’s redactions highlight where the risk lies. AMD withheld the detailed vesting schedule (Exhibit E) and exercise conditions (Exhibit F) from its public 8-K filing, meaning the precise timing, tranching, and technical triggers remain confidential. That opacity makes it impossible to model when revenue recognition aligns with share vesting.

The six-gigawatt figure should also be viewed as a maximum, not a guarantee. OpenAI’s binding commitment currently extends only to the first one gigawatt of MI450 deployment. The remainder depends on future purchases and performance thresholds. In effect, the warrant incentivizes OpenAI to reach those levels, but does not obligate it.

From AMD’s perspective, the main operational risk is execution. Delivering six gigawatts of datacenter GPU capacity requires consistent foundry allocation, substrate availability, and high-bandwidth memory supply over multiple years. This was raised in the analyst call after the announcement, in response to which AMD cited its multi-year investment in supply chain stability. Any disruption in wafer starts or packaging capacity, particularly as AMD competes with NVIDIA and others for TSMC’s CoWoS capacity, could delay fulfillment.

Finally, the structure introduces market timing risk. The first delivery milestone is in late 2026, meaning most financial benefit arrives well after the initial stock price reaction. Between now and then, AMD must continue funding its datacenter R&D pipeline while balancing investor expectations for accelerated AI revenue growth.

Power and Placement

One of the key questions in any AI deployment today is power allocation and where the hardware has to be placed. Demand for power, especially in the US, has become severe enough to prompt extreme measures to get megawatts online. Many other column inches are dedicated to this, so I won’t rehash them here, but I would like to highlight a post by Guido Appenzeller of a16z over on LinkedIn.

In short:

NVIDIA made roughly 6 GW of GPUs in 2025, or about 9 GW of required power once the rest of the datacenter is included

In May 2025, China stood up 90 GW of solar.
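The 6 GW and 9 GW figures in that summary imply a simple overhead multiplier. A minimal sketch, assuming the two figures are comparable annual totals and that the same ratio would apply to AMD hardware. Neither the release nor the 8-K says whether the deal’s six gigawatts is measured at the accelerator or at the facility level, so `facility_gw` only shows what the ratio would imply in the former case. Nameplate solar capacity is not directly comparable to continuous datacenter load, so the solar figure is left out.

```python
NVIDIA_GPU_GW_2025 = 6.0    # GPU power shipped in 2025, per the summary above
NVIDIA_TOTAL_GW_2025 = 9.0  # required power once the rest of the datacenter is included


def overhead_ratio(gpu_gw: float, total_gw: float) -> float:
    """Total facility power per unit of GPU power."""
    return total_gw / gpu_gw


def facility_gw(accelerator_gw: float, ratio: float) -> float:
    """ASSUMPTION: facility power if `accelerator_gw` is counted at the chip level."""
    return accelerator_gw * ratio


if __name__ == "__main__":
    r = overhead_ratio(NVIDIA_GPU_GW_2025, NVIDIA_TOTAL_GW_2025)
    print(f"Overhead ratio: {r:.2f}x")
    for gw in (1.0, 6.0):
        print(f"{gw:.0f} GW of accelerators -> {facility_gw(gw, r):.1f} GW of facility power")
```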

The problem isn’t power generation, which can come from solar in some parts of the world and nuclear or oil/gas in others. It’s all in power delivery, a market that needs an uplift right now. SemiAnalysis has a number of good articles on that industry and on how most power delivery hardware is already sold or reserved for many quarters to come. The implication for AMD and OpenAI is that even if silicon supply is secured, physical deployment remains constrained by transformer lead times, grid approvals, and substation buildouts that can stretch to multiple years.

As for the location of the AMD hardware, OpenAI has consistently preferred US-based locations for its hardware partners. While nothing in the release or 8-K directly ties the hardware to the US, it was stated in the analyst call that the hardware would be consumed either directly or through OpenAI-linked subsidiaries. This could mean neo-clouds with substantial OpenAI contracts being used as part of this agreement.

Market Response and Interpretation

The immediate 33%+ pre-market surge in AMD’s stock following the announcement is unusually strong for a partnership disclosure, but that’s due in part to the numbers involved and the OpenAI-can-do-no-wrong attitude. It reflects investor interpretation that this deal represents both validation and acceleration of AMD’s AI strategy.

For analysts, the market reaction also re-prices AMD’s datacenter division as a growth engine comparable to NVIDIA’s. Even accounting for dilution, the implied enterprise value uplift from six-gigawatt demand more than offsets the new share issuance. Investors appear to view the warrant as proof that AMD’s MI450 and MI500 architectures are credible alternatives capable of capturing high-value AI workloads at scale.

Final Thoughts

This partnership formalizes a shared trajectory between AMD and OpenAI, but it also exposes how much of AMD’s near-term AI narrative now depends on OpenAI’s ability to scale. The warrant is designed to reward real hardware consumption, not speculation, which means AMD’s roadmap execution is only half the story. The other half lies in whether OpenAI can translate massive compute deployment into profitable services and sustained utilization. If OpenAI slows or reprioritizes, the upper tranches of AMD’s warrant structure may never vest.

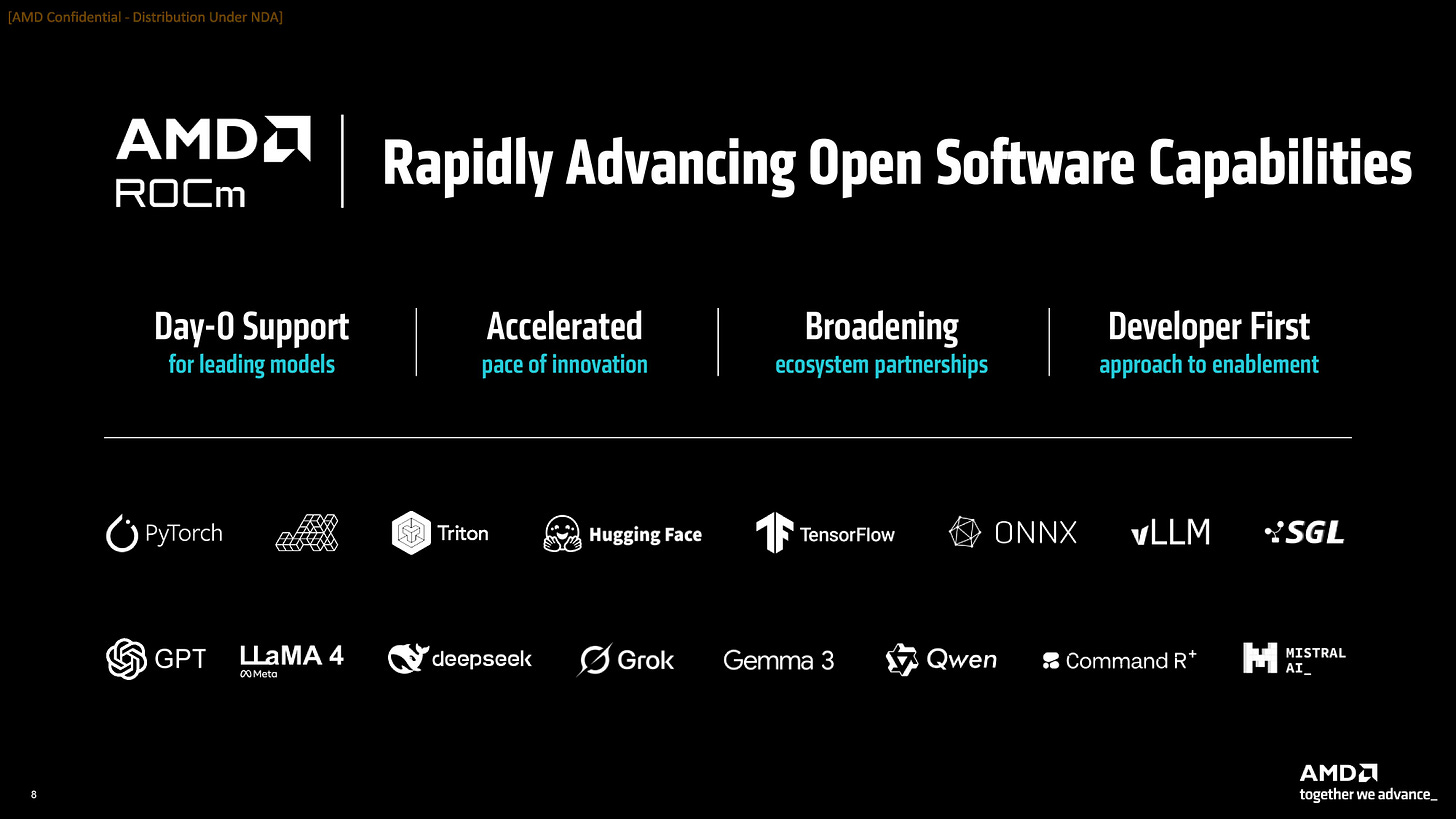

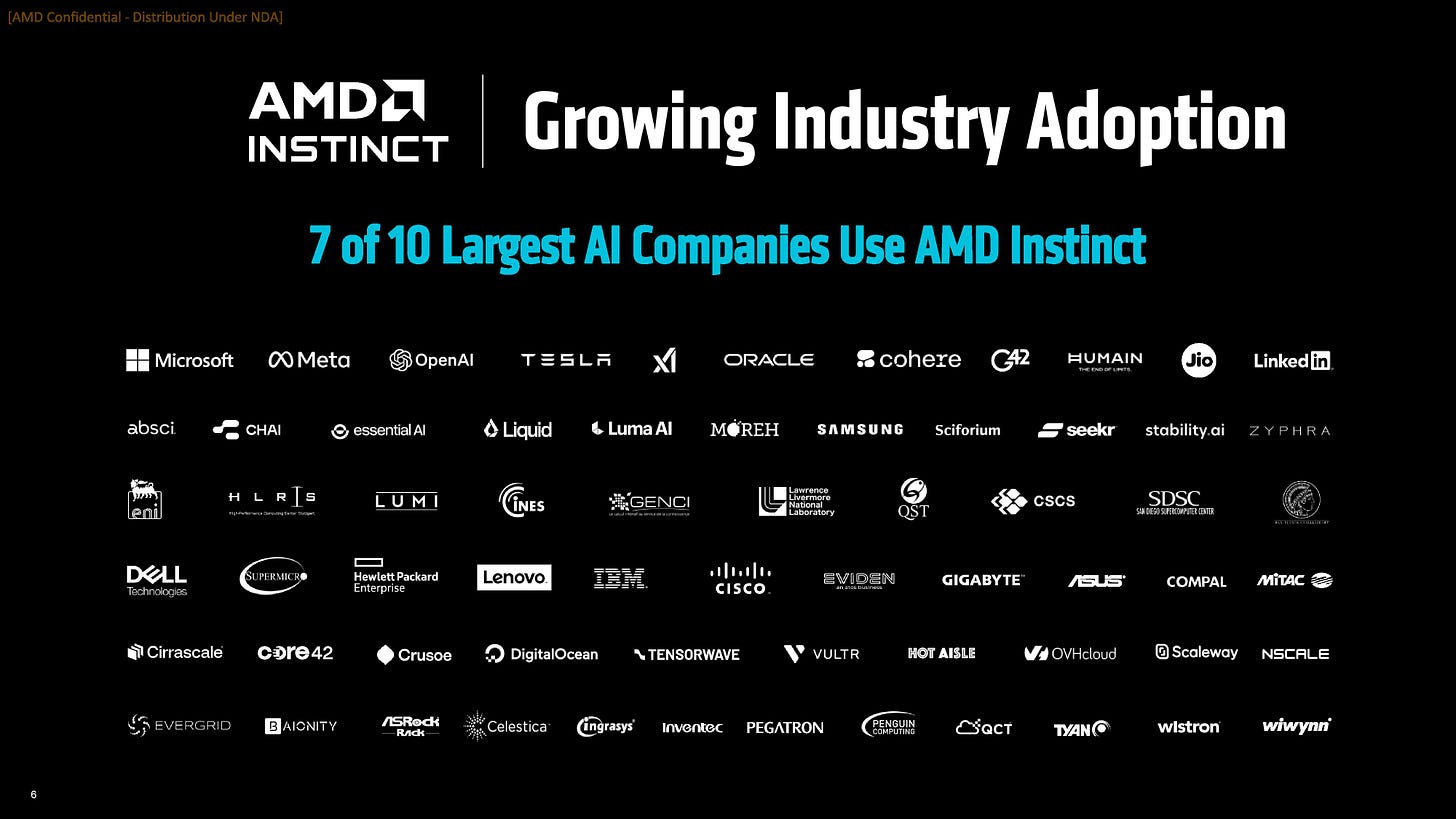

AMD has historically spread its datacenter business across a wide customer base - cloud providers, hyperscalers, enterprise, and HPC - rather than relying on a single anchor tenant. That diversification remains critical. Even if OpenAI drives the first gigawatt of MI450 deployments, AMD’s ability to extend ROCm adoption and platform compatibility will determine whether the MI450 and MI500 families reach beyond this initial engagement. ROCm’s maturity, tooling, and framework integration still lag NVIDIA’s CUDA stack in many developer workflows, though AMD has made measurable progress in large-model inference support and mixed-precision training optimizations.

AMD’s recent acquisition spree reinforces that ambition. Over the course of 2025, the company has made around ten strategic acquisitions to deepen its position across the AI value chain. These include ZT Systems, bringing rack-scale system design and integration directly under AMD’s control; Enosemi, expanding its footprint in co-packaged optics and high-speed interconnect; and a set of software and AI tooling firms such as Nod.ai, Silo AI, Untether AI, and Brium, each aimed at improving compiler efficiency, model optimization, and ROCm ecosystem readiness. Together, they give AMD vertical reach from silicon to system to software, turning it into a full-stack AI infrastructure company capable of delivering pre-engineered, datacenter-scale solutions. The OpenAI partnership will be the first true stress test of how cohesively those pieces operate at scale, and how effectively AMD can turn acquisition-led capability into real deployment velocity.

The OpenAI deal may also accelerate ROCm’s evolution. Working directly with one of the most demanding AI developers in the world provides AMD with both validation and feedback loops that could harden ROCm into a more production-ready ecosystem. If that happens, the benefits will extend beyond OpenAI, strengthening AMD’s position with other model developers and cloud partners that have struggled to integrate ROCm efficiently at scale.

There is also the question of platform breadth. AMD’s Helios systems integrate CPUs and GPUs at rack level, and a deep partnership with OpenAI could pull AMD’s Zen processors into more inference-heavy roles. OpenAI previously stated that AMD’s MI250X delivered roughly ten percent better inference performance in some workloads compared with NVIDIA alternatives. If that remains true across the MI450 generation, and if the Helios systems gain traction as unified CPU–GPU platforms, AMD may capture additional upside beyond the Instinct accelerators themselves.

What remains unclear is how far AMD is willing to tailor its platform strategy around OpenAI’s specific requirements. Dedicated enablement for OpenAI will yield immediate returns but may introduce subtle forms of lock-in or technical divergence that complicate broader customer adoption. Balancing custom enablement with ecosystem generality will be one of AMD’s most strategic decisions in the next two years.

Ultimately, this deal illustrates how the semiconductor business is shifting toward deep bilateral alignment between chipmakers and compute consumers. Supply agreements are becoming investment frameworks. GPU roadmaps are becoming shared infrastructure. And software ecosystems are being forged through the feedback loops of real deployment rather than theoretical optimization.

Whether this partnership becomes a durable foundation or a singular moment will depend on execution across technology, business, and timing. AMD has tied a meaningful portion of its datacenter growth story to OpenAI’s trajectory, while still maintaining the ambition to serve a broader market. The outcome will test whether a company built on diversification can thrive in an era of concentrated AI demand - and whether collaboration with one of the most powerful AI entities in the world will simply expand AMD’s ecosystem or define it.
